Bagging in Machine Learning Explained

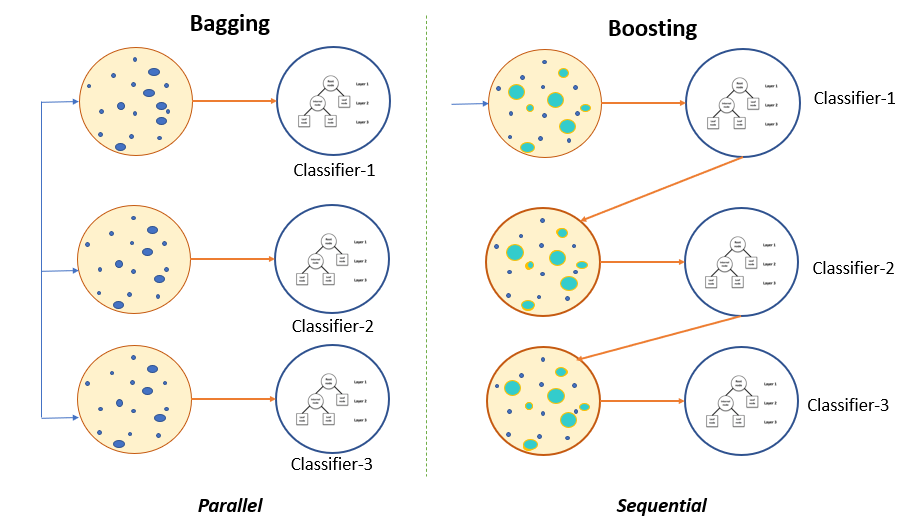

Boosting and Bagging. Boosting is an important part of ensemble learning, but not the only one.

Bagging Machine Learning Concepts

Basically, it's a new architecture.

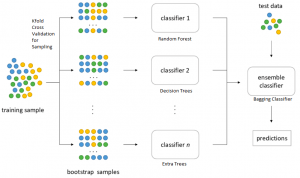

The test_size argument is the ratio of test data to the given data. For example, setting test_size=0.4 for 150 rows of X produces test data of 150 × 0.4 = 60 rows. Ensemble learning is a machine learning paradigm where multiple models, often called weak learners, are trained to solve the same problem and combined to get better results. Random forest, one of the most popular algorithms, is a supervised machine learning algorithm.
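The test_size arithmetic above can be checked with a short sketch. It assumes scikit-learn is installed and uses the Iris dataset only because it happens to have 150 rows and 4 features; the random_state value is an arbitrary choice for repeatability.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Iris is used here as a stand-in dataset: 150 rows, 4 feature columns.
X, y = load_iris(return_X_y=True)

# test_size=0.4 reserves 40% of the rows for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=1
)

print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
# (90, 4) (60, 4) (90,) (60,)
```

The 60 test rows match 150 × 0.4, and the remaining 90 rows form the training set.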

Bagging is used in both regression and classification models and aims to reduce variance and avoid overfitting.

Linear Regression tends to be the Machine Learning algorithm that all teachers explain first, most books start with, and most people end up learning to start their career with. One Machine Learning algorithm can often simplify code and perform better than the traditional approach. Linear regression is a very simple algorithm that takes a vector of features (the variables or characteristics of our data) as an input and gives out a numeric, continuous output. As its name suggests, it is a regression algorithm.
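To make the "vector of features in, continuous number out" idea concrete, here is a minimal sketch using ordinary least squares in NumPy. The toy data and the generating rule y = 3*x1 - 2*x2 + 1 are invented for illustration.

```python
import numpy as np

# Hypothetical toy data: 5 samples with 2 features each,
# generated exactly from y = 3*x1 - 2*x2 + 1 (no noise).
X = np.array([[1.0, 2.0],
              [2.0, 0.0],
              [3.0, 1.0],
              [4.0, 3.0],
              [5.0, 5.0]])
y = 3 * X[:, 0] - 2 * X[:, 1] + 1

# Add a column of ones so the intercept is learned with the weights.
X_design = np.column_stack([np.ones(len(X)), X])
coef, *_ = np.linalg.lstsq(X_design, y, rcond=None)
intercept, weights = coef[0], coef[1:]

# Predict a continuous output for a new feature vector.
new_point = np.array([6.0, 2.0])
prediction = intercept + new_point @ weights
print(intercept, weights, prediction)
```

Because the data is noise-free, the fit should recover an intercept close to 1 and weights close to 3 and -2.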

Through a series of recent breakthroughs, deep learning has boosted the entire field of machine learning. The train_test_split function takes several arguments; for 150 rows with 4 features and test_size=0.4, it returns arrays of shape (90, 4), (60, 4), (90,), and (60,). Random forest creates a forest out of an ensemble of decision trees, which are normally trained using the bagging technique.

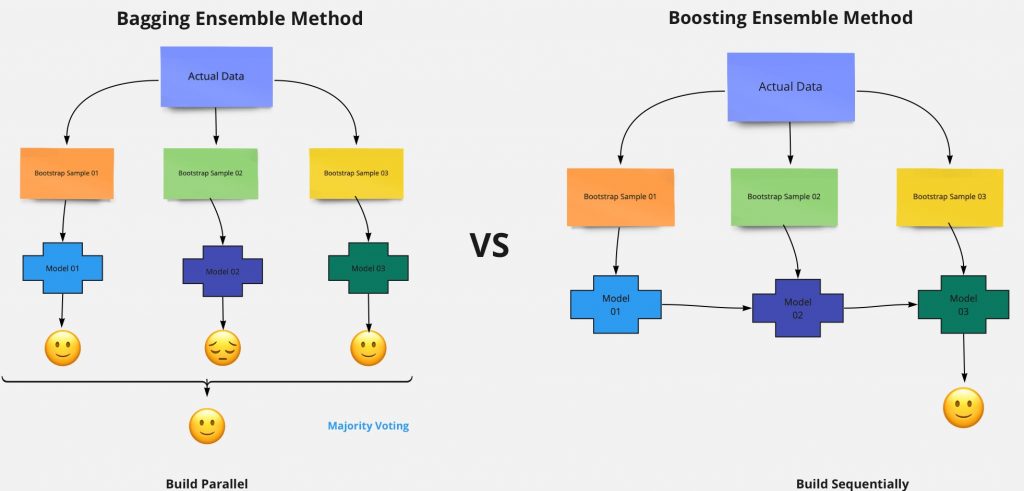

Machine Learning Models Explained. A popular one, but there are other good guys in the class. Boosting is an ensemble learning meta-algorithm for primarily reducing bias, and also variance, in supervised learning.

Machine learning is great for complex problems for which using a traditional approach yields no good solution. Now even programmers who know close to nothing about this technology can use simple tools to build programs that learn from data. Machine Learning is a branch of Artificial Intelligence based on the idea that models and algorithms can learn patterns and signals from data and differentiate the signals from the inherent noise in that data.

Random Forest is one of the most popular and most powerful machine learning algorithms. Let's put these concepts into practice: we'll calculate bias and variance using Python.

Machine learning model performance is relative, and ideas of what score a good model can achieve only make sense, and can only be interpreted, in the context of the skill scores of other models also trained on the same data. Machine learning is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Boosting is basically a family of machine learning algorithms that convert weak learners to strong ones.
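The "weak learners to strong ones" idea can be sketched with AdaBoost, one member of the boosting family. This assumes scikit-learn; the breast cancer dataset, the 70/30 split, and n_estimators=100 are arbitrary choices for illustration. AdaBoostClassifier's default weak learner is a depth-1 decision tree (a stump), so a single stump is trained alongside for comparison.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# One weak learner on its own: a decision stump (tree of depth 1).
stump = DecisionTreeClassifier(max_depth=1, random_state=0)
stump.fit(X_train, y_train)

# Boosting: stumps are fitted one after another, each focusing more
# on the training examples the previous ones misclassified.
boosted = AdaBoostClassifier(n_estimators=100, random_state=0)
boosted.fit(X_train, y_train)

stump_acc = stump.score(X_test, y_test)
boosted_acc = boosted.score(X_test, y_test)
print(f"single stump: {stump_acc:.3f}  boosted stumps: {boosted_acc:.3f}")
```

Exact scores depend on the split and library version, so they are best read as a comparison rather than a benchmark.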

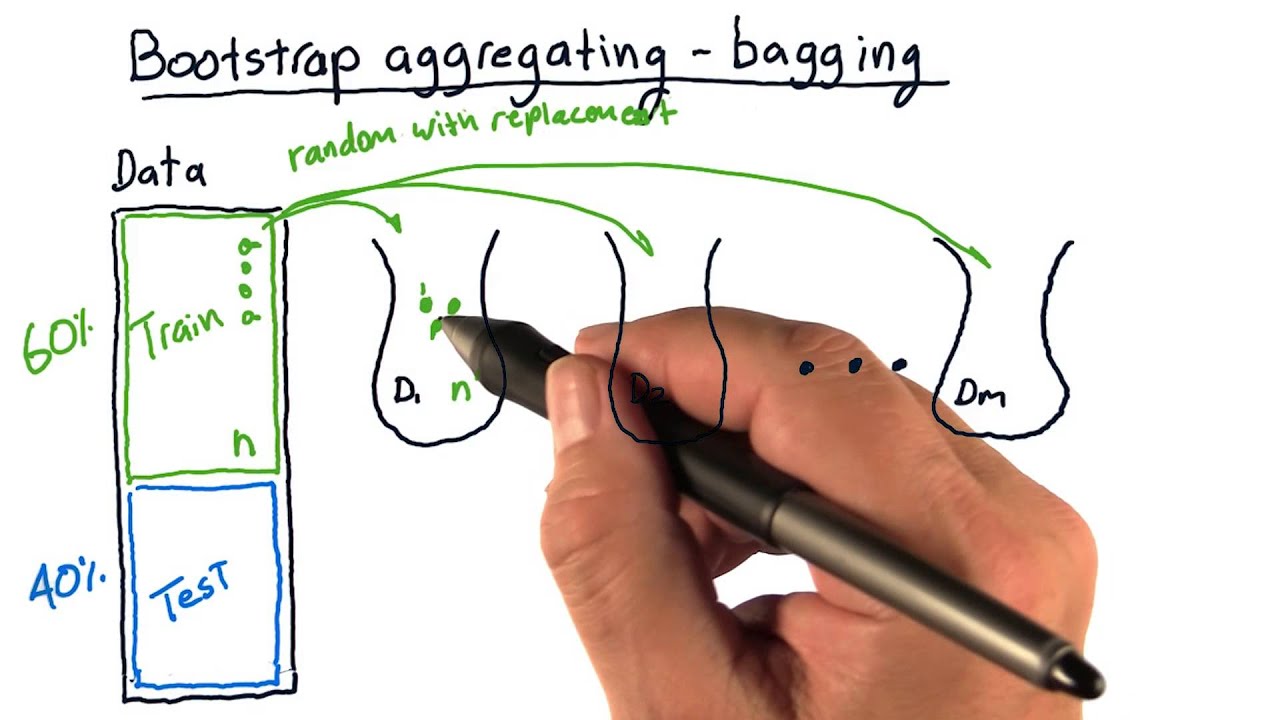

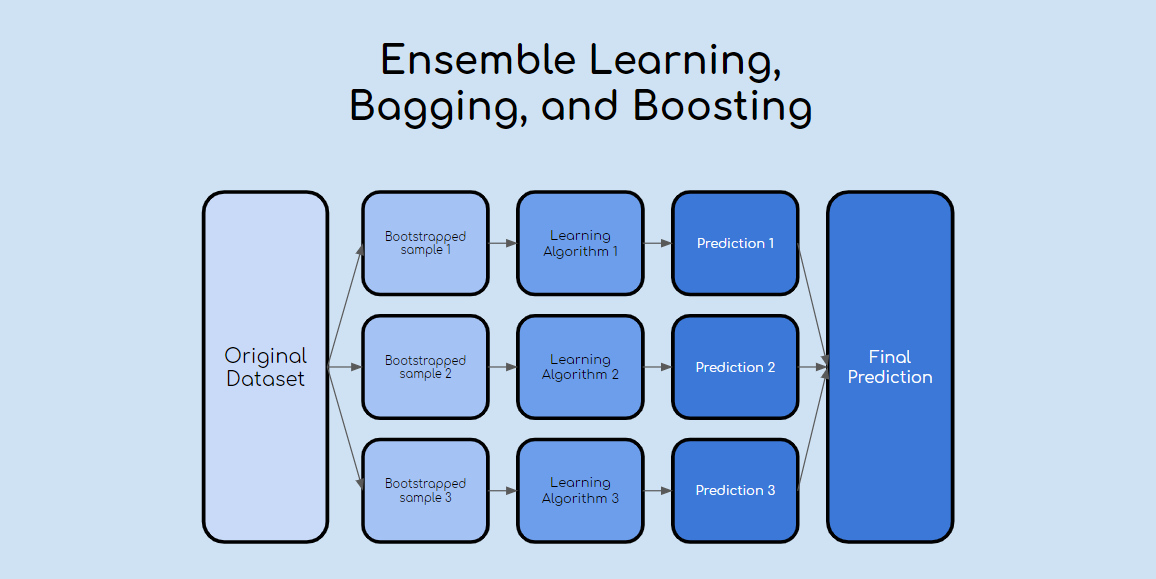

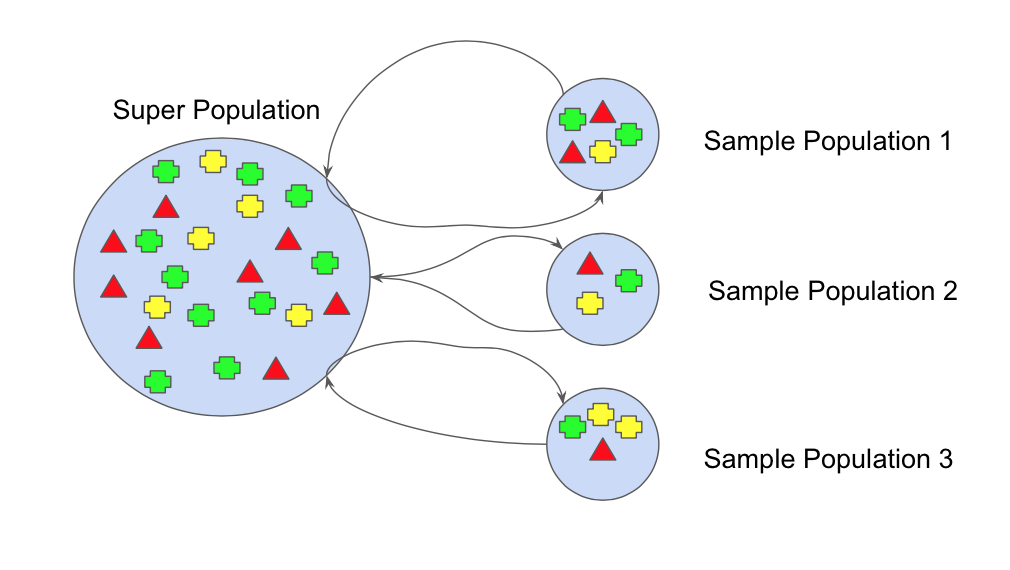

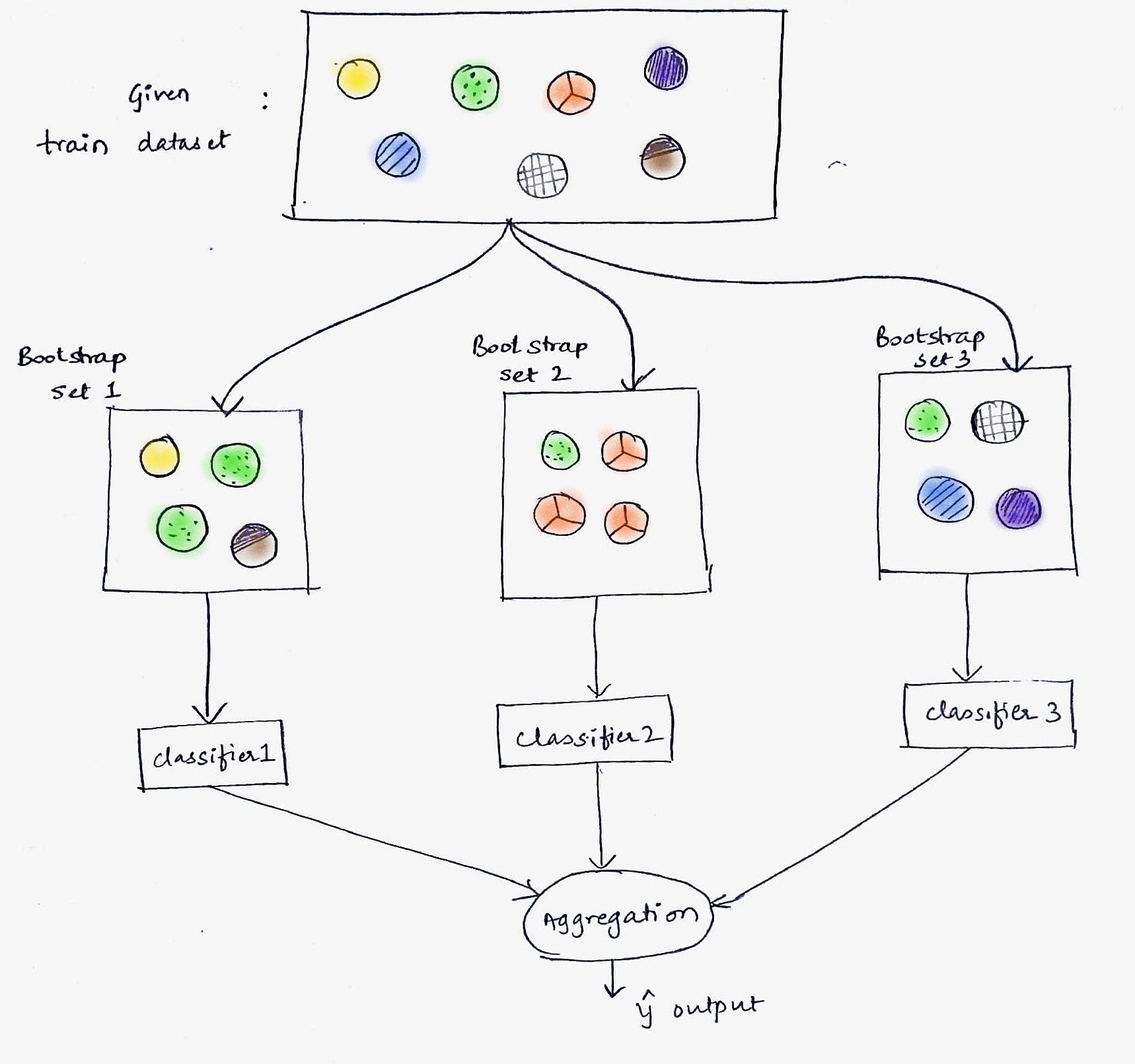

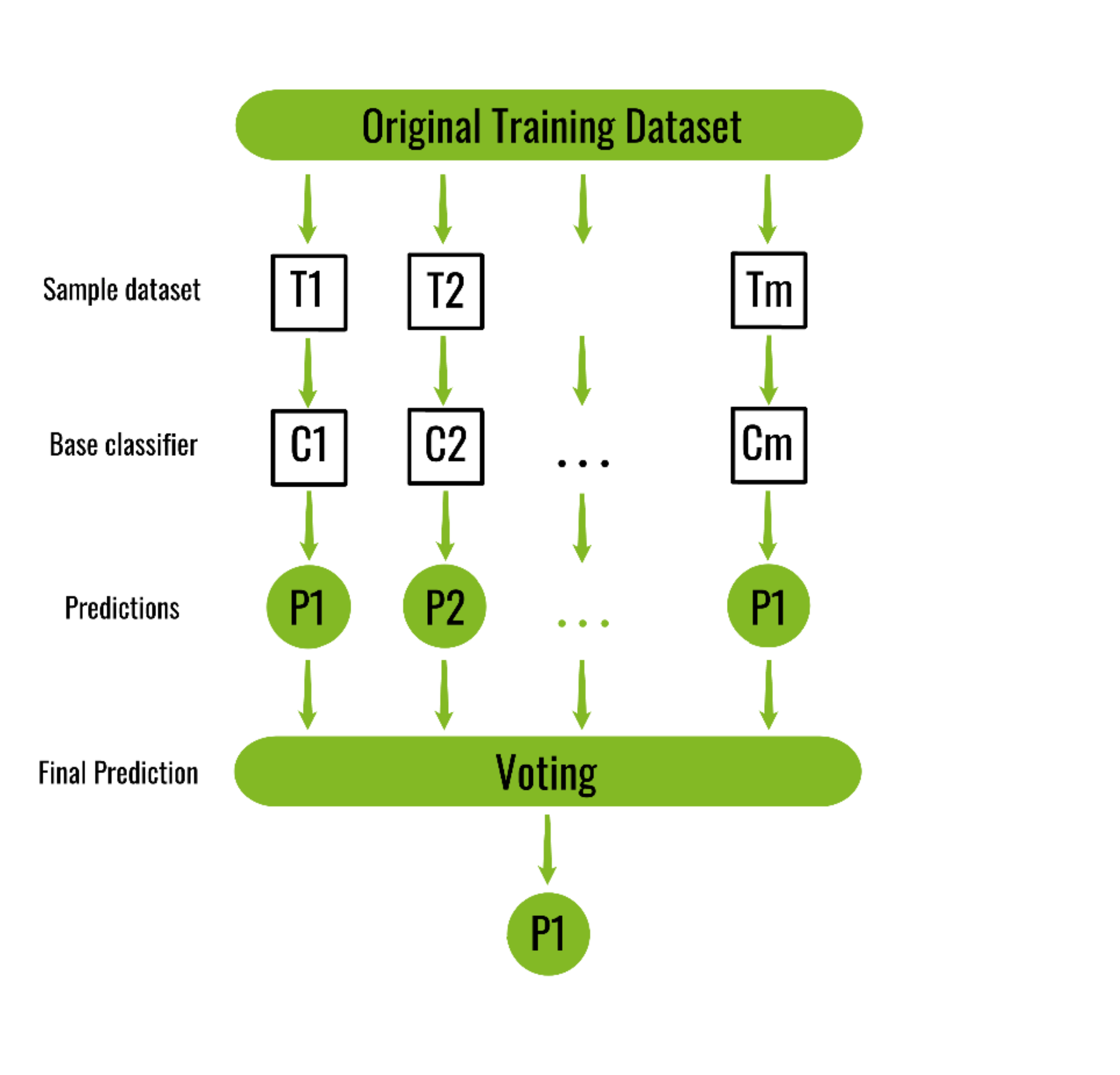

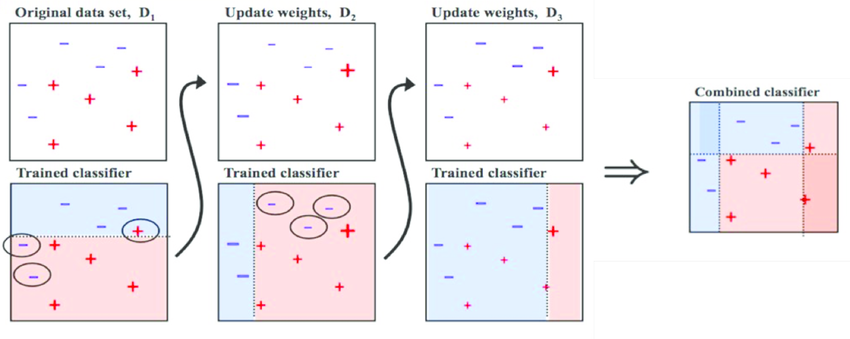

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method. Random forest is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging. The main hypothesis is that when weak models are correctly combined, we can obtain more accurate and/or robust models.
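The two halves of the name, bootstrap and aggregating, can be sketched by hand. The following is a minimal illustration, not a reference implementation: it assumes scikit-learn and NumPy, uses the Iris dataset as a stand-in, and the number of models (25) and the seeds are arbitrary.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0
)

rng = np.random.default_rng(0)
n_models = 25
trees = []
for _ in range(n_models):
    # Bootstrap: draw as many rows as the training set has, with replacement.
    idx = rng.integers(0, len(X_train), size=len(X_train))
    tree = DecisionTreeClassifier(random_state=0)
    trees.append(tree.fit(X_train[idx], y_train[idx]))

# Aggregate: collect every tree's predictions, then take a majority vote
# per test row (for regression, the mean would be used instead).
all_preds = np.stack([t.predict(X_test) for t in trees])  # (n_models, n_test)
bagged = np.array([np.bincount(col, minlength=3).argmax() for col in all_preds.T])

accuracy = (bagged == y_test).mean()
print(f"bagged accuracy: {accuracy:.3f}")
```

Each tree sees a slightly different sample of the training data, so their individual errors differ, and voting averages much of that variation away.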

Boosting is a method of merging different types of predictions. Deep Learning is a modern method of building, training, and using neural networks. Machine learning (ML) is a field of inquiry devoted to understanding and building methods that learn, that is, methods that leverage data to improve performance on some set of tasks.

Arthur Samuel, a pioneer in the field of artificial intelligence and computer gaming, coined the term "Machine Learning". He defined machine learning as a "field of study that gives computers the capability to learn without being explicitly programmed". In layman's terms, Machine Learning (ML) can be explained as automating and improving the learning process of computers.

As we said already, Bagging is a method of merging the same type of predictions. With the help of Random Forest regression, we can prevent overfitting in the model by averaging the predictions of many trees trained on bootstrap samples.

Machine Learning is a part of artificial intelligence. The bagging method's basic principle is that combining different learning models improves the outcome. Boosting mainly decreases bias rather than variance.

Neural networks are one type of machine learning model. Learn about some of the most well-known machine learning algorithms in less than a minute each. In this article, I have covered the following concepts.

Because machine learning model performance is relative, it is critical to develop a robust baseline. Machine Learning is great for problems where a traditional approach falls short.
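One common way to get a baseline is a model that ignores the features entirely. The sketch below assumes scikit-learn and uses its DummyClassifier with the most_frequent strategy; the dataset, split, and the random forest used for comparison are illustrative choices only.

```python
from sklearn.datasets import load_iris
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
# stratify=y keeps the three classes equally represented in both splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0, stratify=y
)

# Baseline: always predict the most frequent training class.
baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
model = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_train, y_train)

baseline_acc = baseline.score(X_test, y_test)
model_acc = model.score(X_test, y_test)
print(f"baseline: {baseline_acc:.3f}  random forest: {model_acc:.3f}")
```

With three balanced classes the baseline scores about one third, which gives the random forest's score something to be measured against.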

The simplest way to calculate bias and variance would be to use a library called mlxtend (machine learning extensions), which is targeted at data science tasks. Bias-variance calculation example. Customer Churn Prediction Analysis Using Ensemble Techniques in Machine Learning.

Machine Learning Algorithms Explained in Less Than 1 Minute Each. Machine learning is also well suited to problems for which existing solutions require a lot of fine-tuning or long lists of rules. Random forest uses the Bagging, or Bootstrap Aggregation, technique of ensemble learning, in which the aggregated decision trees run in parallel and do not interact with each other.
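As a rough illustration of the relationship between plain bagging and random forest, the sketch below fits both with scikit-learn. The dataset, the 50 trees, and the 5-fold cross-validation are arbitrary choices; n_jobs=-1 asks scikit-learn to build the independent trees in parallel.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

models = {
    # Plain bagging: each tree is fitted on a bootstrap sample of the rows.
    "bagged trees": BaggingClassifier(
        DecisionTreeClassifier(), n_estimators=50, random_state=0
    ),
    # Random forest: bagging plus a random subset of features at each split.
    "random forest": RandomForestClassifier(
        n_estimators=50, n_jobs=-1, random_state=0
    ),
}

results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=5)
    results[name] = scores.mean()
    print(f"{name}: mean accuracy {results[name]:.3f}")
```

Because the trees never depend on each other's output, they can be trained in any order or at the same time, which is the main practical difference from boosting.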

Decision trees are a supervised machine learning algorithm used for both classification and regression tasks. The first arguments to train_test_split are the feature matrix and response vector, which need to be split. Bagging decreases variance, not bias, and helps address over-fitting issues in a model.

After reading this post you will know about the bagging and random forest algorithms. The mlxtend library offers a function called bias_variance_decomp that we can use to calculate bias and variance.
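The idea behind a bias-variance decomposition can also be sketched by hand for squared-error loss. The code below is a hand-written approximation of that idea using NumPy and scikit-learn, not mlxtend's own code; the synthetic sine data, the 100 bootstrap rounds, and all variable names are assumptions made for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)

# Synthetic regression problem (assumed for illustration): noisy sine curve.
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.3, size=300)
X_train, y_train = X[:200], y[:200]
X_test, y_test = X[200:], y[200:]

num_rounds = 100
preds = np.empty((num_rounds, len(X_test)))
for i in range(num_rounds):
    # Refit the model on a fresh bootstrap sample of the training set.
    idx = rng.integers(0, len(X_train), size=len(X_train))
    model = DecisionTreeRegressor(random_state=0)
    preds[i] = model.fit(X_train[idx], y_train[idx]).predict(X_test)

mean_pred = preds.mean(axis=0)                  # average prediction per test point
avg_loss = ((preds - y_test) ** 2).mean()       # average squared error
avg_bias = ((mean_pred - y_test) ** 2).mean()   # squared bias (label noise included)
avg_var = preds.var(axis=0).mean()              # spread of predictions across rounds

print(f"loss={avg_loss:.3f}  bias^2={avg_bias:.3f}  variance={avg_var:.3f}")
```

For squared error, the average loss equals the squared bias plus the variance, so the three printed numbers should add up. A fully grown decision tree typically shows a large variance term, which is the part bagging is meant to reduce.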

Bagging is also known as Bootstrap aggregating and is an ensemble learning technique. Moreover, these ML projects for beginners can be executed using ML algorithms like Boosting, Bagging, Gradient Boosting Machine (GBM), XGBoost, Support Vector Machines, and more.

Bagging Decision Trees Clearly Explained. By Nisha Arya. In these types of machine learning projects for final year.

In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling.
